Using WCF for IPC in Media Center Add-Ons

Last time, I gave an overview of the design of my network browsing/copying add-on for Media Center. In this instalment, we look at how to use Windows Communication Foundation (WCF) as a means of interprocess communication (IPC) between the interactive and background portions of the add-on. Recall that each portion runs in a separate instance of the Media Center hosting process (ehexthost.exe). So, even though the code for the add-on is contained within a single assembly, the two entry points do not share a memory space or application domain, and IPC is needed for them to communicate.

WCF is service-oriented, as opposed to, say, .NET Remoting, which is a technology for instantiating objects remotely (and is therefore essentially object-centric). This means that, rather than thinking about which objects to share between the processes, we need to design a service contract that adequately describes everything we want to access from the remote process. Since the interactive portion of the add-on frequently stops and starts, we treat it as the client, and the background portion (which is persistent) as the service.

Service Contract

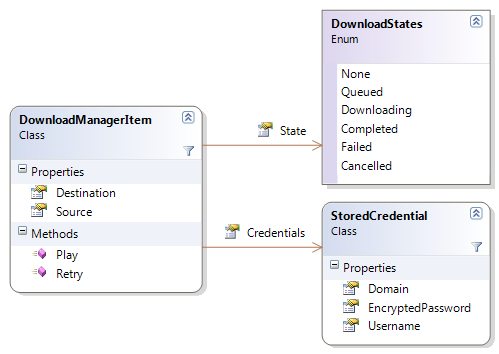

We have some specific requirements for our service:

- Enqueue an item for download

- Cancel either the current or all downloads

- Get the download history (completed/cancelled/failed items from the most recent batch)

- Determine the currently-downloading item (if present)

- Determine the current download progress

- Be able to subscribe to change notifications (for when any of the above change)

In WCF, we define the service contract by writing an interface and decorating it with the ServiceContract and OperationContract attributes, for example:

    [ServiceContract]
    public interface IDownloadManager {
        double CurrentFileProgressRatio {
            [OperationContract] get;
        }

        DownloadManagerItem CurrentItem {
            [OperationContract] get;
        }

        [OperationContract]
        void Enqueue(DownloadManagerItem item);

        [OperationContract]
        void CancelDownloads(bool all);

        [OperationContract]
        List<DownloadManagerItem> GetHistory();
    }

The above satisfies all of our goals except the last one (change notifications), which is a little more complex and will be dealt with later. The next step is to implement the interface, providing the functionality that executes in the service process. The only noteworthy point here is that we decorate the implementation with the ServiceBehavior attribute, which describes how it is instantiated and how concurrent calls are handled:

    [ServiceBehavior(InstanceContextMode = InstanceContextMode.Single, ConcurrencyMode = ConcurrencyMode.Multiple)]
    public class DownloadManager : IDownloadManager {
        //...
    }

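For our download manager service, we want a single shared instance (the singleton pattern), so we indicate this to WCF with InstanceContextMode.Single. This setting is also required because we pass a ready-made instance to the ServiceHost constructor when publishing the service. The download manager already uses multiple threads internally, so we also specify ConcurrencyMode.Multiple. This allows WCF to dispatch concurrent client calls into that single instance without serialising them, and it leaves the implementation responsible for its own synchronisation.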
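ConcurrencyMode.Multiple means WCF does not serialise calls into the singleton, so any state shared between operations has to be guarded. The following is a minimal sketch of one way to do that with a single lock. It is not the add-on's actual implementation. `DownloadQueueState` is a hypothetical class, and plain strings stand in for `DownloadManagerItem`, whose members are not described here.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the shared state inside DownloadManager.
// With InstanceContextMode.Single and ConcurrencyMode.Multiple, WCF may run
// several client calls against this one instance at the same time, so every
// member that touches shared state takes the same lock.
public class DownloadQueueState
{
    private readonly object sync = new object();
    private readonly Queue<string> pending = new Queue<string>();   // strings stand in for DownloadManagerItem
    private readonly List<string> history = new List<string>();

    public void Enqueue(string path)
    {
        lock (sync) { pending.Enqueue(path); }
    }

    // Moves the next pending item into the history; returns null when nothing is pending.
    public string CompleteNext()
    {
        lock (sync)
        {
            if (pending.Count == 0) return null;
            string item = pending.Dequeue();
            history.Add(item);
            return item;
        }
    }

    public int PendingCount
    {
        get { lock (sync) { return pending.Count; } }
    }

    // Returns a copy so callers never enumerate the list while another thread modifies it.
    public List<string> GetHistory()
    {
        lock (sync) { return new List<string>(history); }
    }
}
```

One lock for every member is the simplest correct choice for a sketch like this. A finer-grained scheme would only be worth considering if contention became measurable.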

Publishing the Service

WCF makes it very easy to make a service available to the client. We merely need to select the type of endpoint, assign a URI, provide an instance of the service implementation (DownloadManager) and open the service host. Since the service and client processes reside on the same machine, the most appropriate binding is Named Pipes (NetNamedPipeBinding). It also supports two-way communication, which we will need when implementing change notification. The entire publishing process takes just three lines of code:

    // assumes a class member of type ServiceHost called serviceHost
    serviceHost = new ServiceHost(new DownloadManager(), new Uri("net.pipe://localhost"));
    // the relative address below gives the endpoint net.pipe://localhost/DownloadManager
    serviceHost.AddServiceEndpoint(typeof(IDownloadManager), new NetNamedPipeBinding(), "DownloadManager");
    serviceHost.Open();

As long as the ServiceHost is held in memory (and has not been closed using its Close() method), the service will be available to the client process.

Enabling Change Notification

As we saw in previous instalments, change notification in Media Center is achieved by extending the ModelItem class and raising notifications using the FirePropertyChanged() method. There are three main hurdles to achieving this in our multi-process design:

- Change notifications are raised on the interactive side of the add-on, and require a UI thread in order to operate.

- Services are exposed to the client only as interfaces, so the object the client receives cannot extend ModelItem.

- Events (such as PropertyChanged) cannot be exposed using service contracts.

Thankfully, we can solve this by doing the following:

- Defining a class which extends ModelItem, implements IDownloadManager (our service contract) and wraps the remote instance of our service.

- Using WCF callbacks to allow the service implementation to call methods on the client side.

Implementing Callbacks

WCF callbacks work by defining a separate interface representing methods on a client-side object that the service can call. This interface is associated with the service contract via the CallbackContract property of the ServiceContract attribute. The client supplies an object implementing this interface to WCF when it creates its channel to the service. The service then calls the client-side methods by retrieving that object (exposed via its interface) from WCF during a service method call. However, if we want the freedom to call client-side methods whenever we like (i.e. outside the context of a service method call), as change notification requires, then we need to do something a little more complex:

- Enable sessions on the service contract (via the SessionMode property of the ServiceContract attribute). This ensures that the connection established by the client process persists across all of its service method calls, so it can also be used to invoke callbacks between method calls.

- Maintain a collection of connected clients and the client-side objects whose callback methods we can invoke. Although we think of the design in terms of one service process and one client process, a second client connection may be established before the first has been closed. Hence we must assume a one-to-many relationship, which means uniquely identifying each client connection.

- Expose service methods to enable clients to formally establish/close connections. A Subscribe() method will provide the service with the client-side callback object and issue a unique ID. The Unsubscribe() method will allow the client to stop receiving callbacks (by passing the unique ID from before).

- When invoking a client-side callback, the service needs to iterate through the collection of clients. It must be prepared to catch exceptions, should a connection no longer be valid.

So, the service contract declaration changes:

    [ServiceContract(CallbackContract = typeof(IDownloadManagerCallbacks), SessionMode = SessionMode.Required)]
    public interface IDownloadManager { /* ... */ }

…we add the following service methods:

    [OperationContract]
    Guid Subscribe();

    [OperationContract]
    void Unsubscribe(Guid id);

…and then define the callback interface as:

    public interface IDownloadManagerCallbacks {
        [OperationContract]
        void OnPropertyChanged(string propertyName);
    }

The service implementation’s Subscribe() method obtains the callback object from the client using OperationContext:

    IDownloadManagerCallbacks callback = OperationContext.Current.GetCallbackChannel<IDownloadManagerCallbacks>();
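The bookkeeping described in the list above could look something like the following minimal sketch. It keeps a collection of subscribed clients keyed by a unique ID and runs a notification loop that tolerates dead connections. `SubscriberRegistry` and `DelegateCallback` are hypothetical names. The callback interface is re-declared without its `[OperationContract]` attribute so that the example compiles without WCF. In the real service, the callback passed to `Subscribe` would be the object returned by `GetCallbackChannel`.

```csharp
using System;
using System.Collections.Generic;

// Stand-in for the article's callback contract ([OperationContract] omitted
// so this compiles without System.ServiceModel).
public interface IDownloadManagerCallbacks
{
    void OnPropertyChanged(string propertyName);
}

// Hypothetical helper the DownloadManager service could hold to track clients.
public class SubscriberRegistry
{
    private readonly object sync = new object();
    private readonly Dictionary<Guid, IDownloadManagerCallbacks> clients =
        new Dictionary<Guid, IDownloadManagerCallbacks>();

    // In the service, callback would come from
    // OperationContext.Current.GetCallbackChannel<IDownloadManagerCallbacks>().
    public Guid Subscribe(IDownloadManagerCallbacks callback)
    {
        if (callback == null) throw new ArgumentNullException(nameof(callback));
        Guid id = Guid.NewGuid();
        lock (sync) { clients[id] = callback; }
        return id;
    }

    public void Unsubscribe(Guid id)
    {
        lock (sync) { clients.Remove(id); }
    }

    public int Count
    {
        get { lock (sync) { return clients.Count; } }
    }

    // Invokes every client's callback; a client whose callback throws is dropped.
    public void NotifyPropertyChanged(string propertyName)
    {
        List<KeyValuePair<Guid, IDownloadManagerCallbacks>> snapshot;
        lock (sync) { snapshot = new List<KeyValuePair<Guid, IDownloadManagerCallbacks>>(clients); }

        foreach (var entry in snapshot)
        {
            try
            {
                entry.Value.OnPropertyChanged(propertyName);
            }
            catch (Exception)
            {
                // Under WCF this would typically be a CommunicationException,
                // TimeoutException or ObjectDisposedException from a closed connection.
                Unsubscribe(entry.Key);
            }
        }
    }
}

// Small adapter used for illustration: forwards callbacks to a delegate.
public class DelegateCallback : IDownloadManagerCallbacks
{
    private readonly Action<string> onChanged;
    public DelegateCallback(Action<string> onChanged) { this.onChanged = onChanged; }
    public void OnPropertyChanged(string propertyName) { onChanged(propertyName); }
}
```

The registry snapshots the client list under the lock and invokes the callbacks outside it. This means an unresponsive client cannot block other threads from subscribing or unsubscribing while notifications are being sent.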

Implementing a Proxy Class

As previously indicated, change notification requires that we extend the Media Center ModelItem class and call its FirePropertyChanged() method in order to raise a notification in a manner that is thread-safe and supports object paths. When the client obtains a remote instance of the service, it is exposed only in terms of the IDownloadManager interface. Since we cannot force that object to extend ModelItem, we need to write a wrapper/proxy class. This also enables us to implement the callback interface, IDownloadManagerCallbacks, and wire up its callback method (OnPropertyChanged) to the FirePropertyChanged() method of the base class.

The proxy class is implemented as follows:

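    public class DownloadManagerProxy : ModelItem, IDownloadManager, IDownloadManagerCallbacks {
        IDownloadManager dm;
        Guid id;

        public void Init(IDownloadManager dm) {
            this.dm = dm;
            id = dm.Subscribe();
        }

        protected override void Dispose(bool disposing) {
            if (disposing) {
                if (dm != null) dm.Unsubscribe(id);
            }
            base.Dispose(disposing);
        }

        #region IDownloadManager Members

        // all we do here is wrap the methods from IDownloadManager
        public double CurrentFileProgressRatio {
            get { return dm.CurrentFileProgressRatio; }
        }

        public DownloadManagerItem CurrentItem {
            get { return dm.CurrentItem; }
        }

        public void Enqueue(DownloadManagerItem item) { dm.Enqueue(item); }
        public void CancelDownloads(bool all) { dm.CancelDownloads(all); }
        public List<DownloadManagerItem> GetHistory() { return dm.GetHistory(); }
        public Guid Subscribe() { return dm.Subscribe(); }
        public void Unsubscribe(Guid subscriptionId) { dm.Unsubscribe(subscriptionId); }

        #endregion

        #region IDownloadManagerCallbacks Members

        // invoked by the service; raised as a Media Center change notification
        void IDownloadManagerCallbacks.OnPropertyChanged(string propertyName) {
            FirePropertyChanged(propertyName);
        }

        #endregion
    }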

Now the client process can call any IDownloadManager method on the proxy and have it forwarded to the corresponding service method. Additionally, because the proxy extends ModelItem, it exposes the PropertyChanged event. It can therefore be referenced in MCML and will receive change notifications whenever the service invokes the callback method.

Consuming the Service

In order to retrieve the remote instance of the service (which is then used to initialise the proxy object), the client process needs to create a channel. This channel must match the characteristics of the service endpoint, hence in this case must use Named Pipes and the URI we previously specified. A duplex channel is required in order to support callbacks. The full initialisation process is as follows:

    DownloadManagerProxy proxy = new DownloadManagerProxy();
    // the proxy doubles as the callback object that receives OnPropertyChanged calls
    DuplexChannelFactory<IDownloadManager> factory = new DuplexChannelFactory<IDownloadManager>(
        proxy,
        new NetNamedPipeBinding(),
        new EndpointAddress("net.pipe://localhost/DownloadManager")
    );
    IDownloadManager dm = factory.CreateChannel();
    proxy.Init(dm);

(Exceptions should be handled, as connections could fail – most notably, this will occur in Media Center during the first few seconds after startup, when the interactive UI is available but the background process has not yet been started.)
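One simple way to cope with this start-up race is to retry the connection a few times with a short delay. The helper below is a hypothetical sketch: the name `ConnectionRetry.Connect` and the retry policy are assumptions and not part of WCF. `CreateChannel()` does not itself contact the service, so in the code above a missing service would most likely surface when `Init()` calls `Subscribe()`. The delegate passed to the helper should therefore create a fresh factory and channel and call `Init()` on each attempt, rather than reusing a channel whose first call failed.

```csharp
using System;
using System.Threading;

public static class ConnectionRetry
{
    // Hypothetical helper: calls connect() until it succeeds or maxAttempts is reached.
    // The exception from the final attempt is rethrown to the caller.
    public static T Connect<T>(Func<T> connect, int maxAttempts, TimeSpan delay)
    {
        if (maxAttempts < 1) throw new ArgumentOutOfRangeException(nameof(maxAttempts));

        for (int attempt = 1; ; attempt++)
        {
            try
            {
                return connect();
            }
            catch (Exception) when (attempt < maxAttempts)
            {
                // Real code would catch narrower WCF exceptions here,
                // e.g. EndpointNotFoundException or CommunicationException.
                Thread.Sleep(delay);
            }
        }
    }
}
```

Because `Thread.Sleep` blocks the calling thread, a helper like this belongs on a background thread rather than the Media Center UI thread.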

Final Words

The above process may seem convoluted, but I believe it represents best practice for designing a Media Center add-on that continues to perform operations after its interactive portion has been closed by the user. Communication between the background and interactive processes is necessary, and WCF is as good a technology as any with which to achieve this. Finally, change notifications are an absolute must for Media Center objects; without them, the UI can never be as rich and automated as it otherwise could be. These concepts may seem complex at first, but one can quickly develop a pattern for implementing them in future.

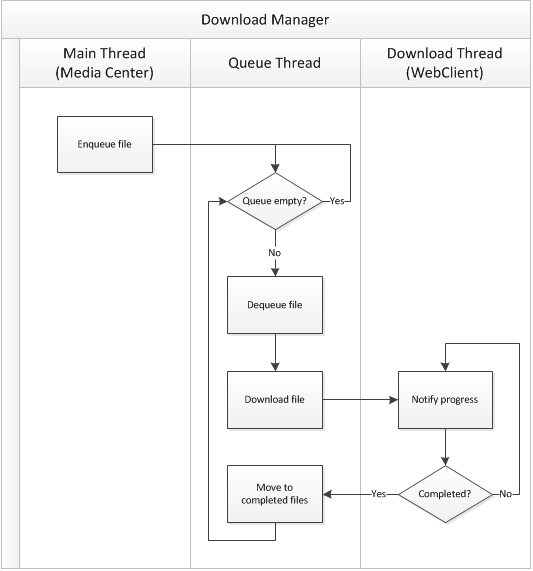

Next time, we’ll look at the implementation of the download manager itself.